BEV Vance - A Clearer View For Autonomous Futures

The way self-driving vehicles understand the world around them is changing quickly. For a long time, working out what surrounds a car from camera images alone has been a tricky puzzle, and it has often produced inconsistent results. It is a bit like trying to draw a whole room while looking through a keyhole. That is why there is a strong push toward an explicit bird's-eye view, usually called BEV: a top-down representation of the scene that gives the car a much more direct way to make sense of its surroundings. BEV is becoming central to how these systems operate.

The Bird's Eye View (BEV) gives a top-down look at the scene around the vehicle, which is extremely useful for self-driving technology. It shows where the car is relative to everything nearby, such as other vehicles and pedestrians, and where those objects are located. Positions and distances on the ground can be read directly from this view, so it simplifies many of the calculations an autonomous system has to perform.

BEV perception is one of the most significant approaches to how self-driving cars perceive their surroundings. It takes what the cameras see and turns it into an overhead map. This transformation matters because the car can then identify lane markings, other vehicles and similar features directly on a flat, easy-to-read surface. It works like a continuously updating map, which helps both accuracy and decision-making on the road.

Table of Contents

- What is the BEV Vance Perspective?

- The BEV Vance Challenge - Seeing from Above

- How Does BEV Vance Help Cars See Lanes?

- BEV Vance and Data Fusion - Bringing Information Together

- Why is BEV Vance Important for Electric Cars?

- The Role of BEV Vance in Mapping Our Roads

- What Steps are Needed for BEV Vance to Work in Autonomous Driving?

- Sharing Knowledge on BEV Vance - A Community Effort

What is the BEV Vance Perspective?

The BEV Vance perspective, as we might call it, refers to the Bird's Eye View. This is a top-down way of representing the scene that is widely used in self-driving technology, and it gives vehicles a much better idea of their surroundings. It processes information very differently from the way a human sees the road through a windshield. It is more like a drone hovering constantly above the car and its immediate area, which is helpful when making driving decisions.

Generating this BEV from ordinary camera images is difficult. It is what is called an "ill-posed problem": a single image does not contain enough information to determine a unique answer, because many different 3D scenes can produce the same picture. If the overhead view is built first and then used to find objects, small errors in the first step carry over into the second and add up. It is like assembling a puzzle in which some pieces do not quite fit, so the finished picture ends up slightly distorted.
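
To see why the problem is ill-posed, consider an idealized pinhole camera. The sketch below shows two points at different depths that lie on the same viewing ray. Both land on exactly the same pixel, so the image alone cannot tell them apart. The intrinsics (FX, FY, CX, CY) and the points are hypothetical values chosen for illustration.

```python
from typing import Tuple


def project(point_cam: Tuple[float, float, float],
            fx: float, fy: float, cx: float, cy: float) -> Tuple[float, float]:
    """Ideal pinhole projection of a camera-frame point (x right, y down, z forward, metres)."""
    x, y, z = point_cam
    if z <= 0:
        raise ValueError("point must be in front of the camera (z > 0)")
    return (fx * x / z + cx, fy * y / z + cy)


# Hypothetical intrinsics, chosen only for illustration.
FX = FY = 1000.0
CX, CY = 960.0, 540.0

near = (1.0, 0.5, 10.0)  # a point 10 m in front of the camera
far = (2.0, 1.0, 20.0)   # twice as far away, on the same viewing ray

print(project(near, FX, FY, CX, CY))  # (1060.0, 590.0)
print(project(far, FX, FY, CX, CY))   # (1060.0, 590.0): same pixel, different depth
```

Going the other way, from pixel (1060, 590) back to 3D, only yields a ray. Something else has to choose a point on that ray, such as a depth estimate, a ground-plane assumption or another sensor.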

Despite these difficulties, there is a real commitment to building a clear, explicit BEV feature, because such a feature is useful for many purposes. It provides common ground for all kinds of information, which makes it easier to combine data from different sensors. It also lets autonomous systems process diverse inputs in a unified way, which is essential for reliable operation.

The BEV Vance Challenge - Seeing from Above

Whatever specific method is used for BEV perception, the architecture always revolves around what we call "conversion modules". These are the components that map many different kinds of input data consistently into the BEV space. This is a fundamental step. Whether the goal is to detect objects, segment areas of the road or predict where something will move next, all of that information needs to be expressed in the same overhead format. This unified mapping is at the core of making these systems work well.
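
Whatever the upstream sensor, a conversion module eventually has to agree on one grid. This is a minimal sketch, assuming a hypothetical 100 m x 100 m grid centred on the ego vehicle with 0.5 m cells. It also assumes inputs are already in ego-frame coordinates (x forward, y left, in metres). In the example, a camera detection and a radar return that describe the same car land in the same cell. The observation names and values are made up.

```python
from typing import Optional, Tuple

# Hypothetical BEV grid: 100 m x 100 m centred on the ego vehicle, 0.5 m cells.
# Ego frame: x forward, y left, both in metres.
X_MIN, X_MAX = -50.0, 50.0
Y_MIN, Y_MAX = -50.0, 50.0
RESOLUTION = 0.5
ROWS = int((X_MAX - X_MIN) / RESOLUTION)  # 200
COLS = int((Y_MAX - Y_MIN) / RESOLUTION)  # 200


def to_bev_cell(x: float, y: float) -> Optional[Tuple[int, int]]:
    """Map an ego-frame point to a (row, col) BEV cell, or None if it lies off the grid."""
    if not (X_MIN <= x < X_MAX and Y_MIN <= y < Y_MAX):
        return None
    # Clamp guards against floating-point rounding right at the upper edge.
    row = min(int((x - X_MIN) / RESOLUTION), ROWS - 1)
    col = min(int((y - Y_MIN) / RESOLUTION), COLS - 1)
    return row, col


# Inputs from different sources, already expressed in the ego frame.
observations = {
    "camera_detection": (12.3, -1.7),
    "radar_return": (12.1, -1.9),
    "out_of_range": (80.0, 0.0),
}
for name, (x, y) in observations.items():
    print(name, to_bev_cell(x, y))
# camera_detection (124, 96)
# radar_return (124, 96)
# out_of_range None
```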

Getting an accurate BEV Vance view from camera images alone is hard because cameras capture a two-dimensional picture of a three-dimensional world. Depth is lost in that projection. When the image is turned into a top-down map, the system has to estimate how far away each point is, and errors in that estimate become errors in position. It is like trying to guess the height of a building from its shadow: you get some clues, but not the full picture. Systems therefore need clever ways to fill in the missing information, and that is a big part of the ongoing work in this field.
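
One classic way to fill in the missing depth is to assume the road is flat. This is inverse perspective mapping: each pixel's viewing ray is intersected with the ground plane. The minimal sketch below assumes the camera has zero pitch and roll, a known mounting height and the hypothetical intrinsics shown. It is an illustration of that single geometric assumption, not of any specific production method.

```python
from typing import Optional, Tuple


def pixel_to_ground(u: float, v: float, fx: float, fy: float, cx: float, cy: float,
                    cam_height: float) -> Optional[Tuple[float, float]]:
    """Inverse perspective mapping under a flat-ground, zero-pitch, zero-roll assumption.

    Camera frame: x right, y down, z forward. Returns the ground point as
    (forward, left) in metres, or None if the pixel is at or above the horizon.
    """
    slope_down = (v - cy) / fy           # y-component of the ray through (u, v) with z = 1
    if slope_down <= 0:
        return None                      # this ray never reaches the ground
    forward = cam_height / slope_down    # scale the ray until it has dropped by cam_height
    right = forward * (u - cx) / fx
    return forward, -right               # convert "right" to the ego convention "left"


# Hypothetical camera intrinsics; camera mounted 1.5 m above a flat road.
print(pixel_to_ground(1060, 690, 1000.0, 1000.0, 960.0, 540.0, cam_height=1.5))
# about (10.0, -1.0): 10 m ahead, 1 m to the right
print(pixel_to_ground(1060, 500, 1000.0, 1000.0, 960.0, 540.0, cam_height=1.5))
# None: above the horizon
```

The flat-ground assumption is exactly where errors creep in. A pixel on the roof of a truck is treated as if it were on the road, so it is placed much farther away than the truck really is. Many learned approaches instead estimate depth per pixel rather than relying on this fixed assumption.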

Even with these hurdles, the explicit BEV feature is valuable because it offers a standardized way to represent the environment. A standard view makes it much simpler for different parts of the autonomous system to exchange information. It acts as a common language for the various sensors and processing units. That shared understanding reduces confusion and makes the whole system more reliable, which matters a great deal when vehicles are driving themselves.

How Does BEV Vance Help Cars See Lanes?

BEV perception projects features extracted from camera images into the overhead BEV space. Once the image information is in the bird's-eye view, the system can detect lane lines directly. This is where the learning ability of neural networks comes into play. They can be trained to make lane-line detection very accurate, reducing errors in where the lines are placed in space. That accuracy helps keep the car exactly where it needs to be on the road.
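
Once lane points are in BEV coordinates, a curved lane can be summarized with a low-order polynomial. The sketch below fits y = a*x^2 + b*x + c by ordinary least squares in pure Python. It assumes x is forward distance and y is lateral offset (left positive), both in metres. The quadratic model, the function name and the sample points are assumptions for illustration. In a learned pipeline the network would produce the lane points; this fit only shows how such points can be turned into a smooth curve.

```python
from typing import Sequence, Tuple


def fit_lane_quadratic(points: Sequence[Tuple[float, float]]) -> Tuple[float, float, float]:
    """Least-squares fit of y = a*x**2 + b*x + c to BEV lane points.

    x is the forward distance and y the lateral offset (left positive), in metres.
    """
    if len(points) < 3:
        raise ValueError("need at least 3 points for a quadratic")
    s = [0.0] * 5  # s[k] = sum of x**k
    t = [0.0] * 3  # t[k] = sum of y * x**k
    for x, y in points:
        xk = 1.0
        for k in range(5):
            s[k] += xk
            if k < 3:
                t[k] += y * xk
            xk *= x
    # Normal equations (A^T A) p = A^T y for p = (a, b, c), as an augmented matrix.
    m = [[s[4], s[3], s[2], t[2]],
         [s[3], s[2], s[1], t[1]],
         [s[2], s[1], s[0], t[0]]]
    # Gaussian elimination with partial pivoting.
    for col in range(3):
        pivot = max(range(col, 3), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        if abs(m[col][col]) < 1e-12:
            raise ValueError("degenerate point set")
        for r in range(col + 1, 3):
            f = m[r][col] / m[col][col]
            for c in range(col, 4):
                m[r][c] -= f * m[col][c]
    # Back substitution.
    p = [0.0, 0.0, 0.0]
    for r in (2, 1, 0):
        p[r] = (m[r][3] - sum(m[r][c] * p[c] for c in range(r + 1, 3))) / m[r][r]
    return p[0], p[1], p[2]


# Hypothetical lane points sampled from a gently curving line.
lane = [(x, 0.002 * x * x - 0.01 * x + 1.8) for x in range(0, 45, 5)]
a, b, c = fit_lane_quadratic(lane)
print(round(a, 4), round(b, 4), round(c, 4))  # 0.002 -0.01 1.8
```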

Compared with other ways of representing the car's surroundings, such as occupancy grids or multi-object tracking, BEV offers a very direct path to tasks like lane-line detection. It streamlines the work of finding where the lanes are and how they curve. As a result, the car can react more quickly and smoothly to changes in the road, which matters for safe driving.

Reducing spatial position deviations is one of the biggest benefits of using BEV Vance for lane detection. When the car can locate the lane lines precisely, it can follow them more accurately, even around bends or in changing conditions. It is like having a precise ruler laid on the road that helps the car keep a steady path. This precision is especially important for comfortable and safe autonomous driving, because it keeps the car from drifting or making sudden corrections.
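
A precise lane estimate pays off when it is turned into a quantity a lane-keeping controller can use, such as how far the vehicle is from the lane centre. The sketch assumes each lane line is already available as quadratic coefficients in the ego frame (x forward, y left, metres). The function names and numbers are hypothetical.

```python
from typing import Tuple

Coeffs = Tuple[float, float, float]  # (a, b, c) for y = a*x**2 + b*x + c, metres


def lane_y(coeffs: Coeffs, x: float) -> float:
    """Lateral position (left positive) of a lane line x metres ahead."""
    a, b, c = coeffs
    return a * x * x + b * x + c


def lane_centre_offset(left: Coeffs, right: Coeffs, lookahead: float = 0.0) -> float:
    """Lateral position of the lane centre relative to the vehicle, `lookahead` metres ahead.

    Positive means the centre lies to the left of the vehicle, i.e. the vehicle
    currently sits to the right of the lane centre.
    """
    return 0.5 * (lane_y(left, lookahead) + lane_y(right, lookahead))


# Hypothetical fitted lane lines: a 3.5 m lane curving gently to the left.
left_line = (0.002, 0.0, 1.9)
right_line = (0.002, 0.0, -1.6)
print(lane_centre_offset(left_line, right_line))        # about 0.15 m at the vehicle
print(lane_centre_offset(left_line, right_line, 20.0))  # about 0.95 m, 20 m ahead
```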

BEV Vance and Data Fusion - Bringing Information Together

The arrival of BEV has made it possible to express many different types of data at a consistent scale. Take the research paper on HDMapNet as an example. Its main idea was to use various kinds of collected sensor data to produce high-quality yet affordable high-definition maps. The authors designed a system that handles multiple sensors, which can be plugged in and out as needed. This flexibility lets the system adapt to different hardware setups while still gathering the information it needs.

When all sensor information is placed into the same BEV Vance framework, combining it becomes much easier. Data from cameras, radar, lidar and other sensors can be fused on one unified map. The result is a more complete and reliable picture of the car's surroundings than any single sensor could provide. It is like assembling a puzzle from pieces supplied by different sources: together they show the whole picture, which is powerful for complex tasks.
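
Once every sensor reports on the same grid, fusion can be as simple as a per-cell combination rule. The sketch below uses the classic log-odds update for occupancy grids. This is a swapped-in textbook technique chosen for clarity, not necessarily what any particular BEV system does; learned systems usually fuse feature maps inside the network. It assumes a 0.5 prior and independent sensor errors, and the per-sensor probabilities are made up.

```python
import math
from typing import Dict, List

Grid = List[List[float]]  # per-cell occupancy probability, strictly between 0 and 1


def logit(p: float) -> float:
    return math.log(p / (1.0 - p))


def fuse_occupancy(grids: Dict[str, Grid]) -> Grid:
    """Fuse same-shaped BEV occupancy grids by adding log-odds.

    Assumes a 0.5 prior (log-odds 0) and that sensor errors are independent.
    """
    if not grids:
        raise ValueError("need at least one grid")
    shapes = {(len(g), len(g[0])) for g in grids.values()}
    if len(shapes) != 1:
        raise ValueError("all grids must share the same BEV shape")
    rows, cols = shapes.pop()
    fused = [[0.0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            log_odds = sum(logit(g[r][c]) for g in grids.values())
            fused[r][c] = 1.0 / (1.0 + math.exp(-log_odds))
    return fused


# Hypothetical 1 x 2 grids: cell 0 contains an object, cell 1 is uncertain.
per_sensor = {
    "camera": [[0.7, 0.5]],
    "radar": [[0.6, 0.5]],
    "lidar": [[0.8, 0.3]],
}
print([[round(p, 3) for p in row] for row in fuse_occupancy(per_sensor)])
# [[0.933, 0.3]]
```

Three moderately confident sensors that agree produce a more confident fused estimate (0.933) than any of them alone. A sensor reporting 0.5 leaves a cell unchanged.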

This unified scale is particularly helpful for building high-definition maps, which are crucial for autonomous driving. These maps need to be extremely precise, showing every lane, curb and road sign. With BEV, all the diverse sensor inputs contribute to these maps in a consistent way. That consistency makes the maps more accurate and reliable, which in turn supports the safety and performance of self-driving vehicles.
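
Building a map from many frames requires putting every frame's BEV observations into one shared coordinate frame. This is a minimal sketch, assuming each frame comes with a 2D vehicle pose (x, y, yaw) in the map frame from some localization system. The poses below are invented. The same curb corner seen from two different positions lands at the same map coordinate, which is what lets observations be merged consistently.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]
Pose = Tuple[float, float, float]  # vehicle (x, y, yaw) in the map frame; metres, radians


def ego_to_map(points: List[Point], pose: Pose) -> List[Point]:
    """Rigidly transform ego-frame BEV points (x forward, y left) into the map frame."""
    px, py, yaw = pose
    c, s = math.cos(yaw), math.sin(yaw)
    return [(px + c * x - s * y, py + s * x + c * y) for x, y in points]


# The same curb corner observed in two frames from two hypothetical poses.
frame_1 = ego_to_map([(10.0, 2.0)], pose=(0.0, 0.0, 0.0))
frame_2 = ego_to_map([(5.0, 0.0)], pose=(10.0, -3.0, math.pi / 2))
print(frame_1)  # [(10.0, 2.0)]
print([(round(x, 6), round(y, 6)) for x, y in frame_2])  # [(10.0, 2.0)]
```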

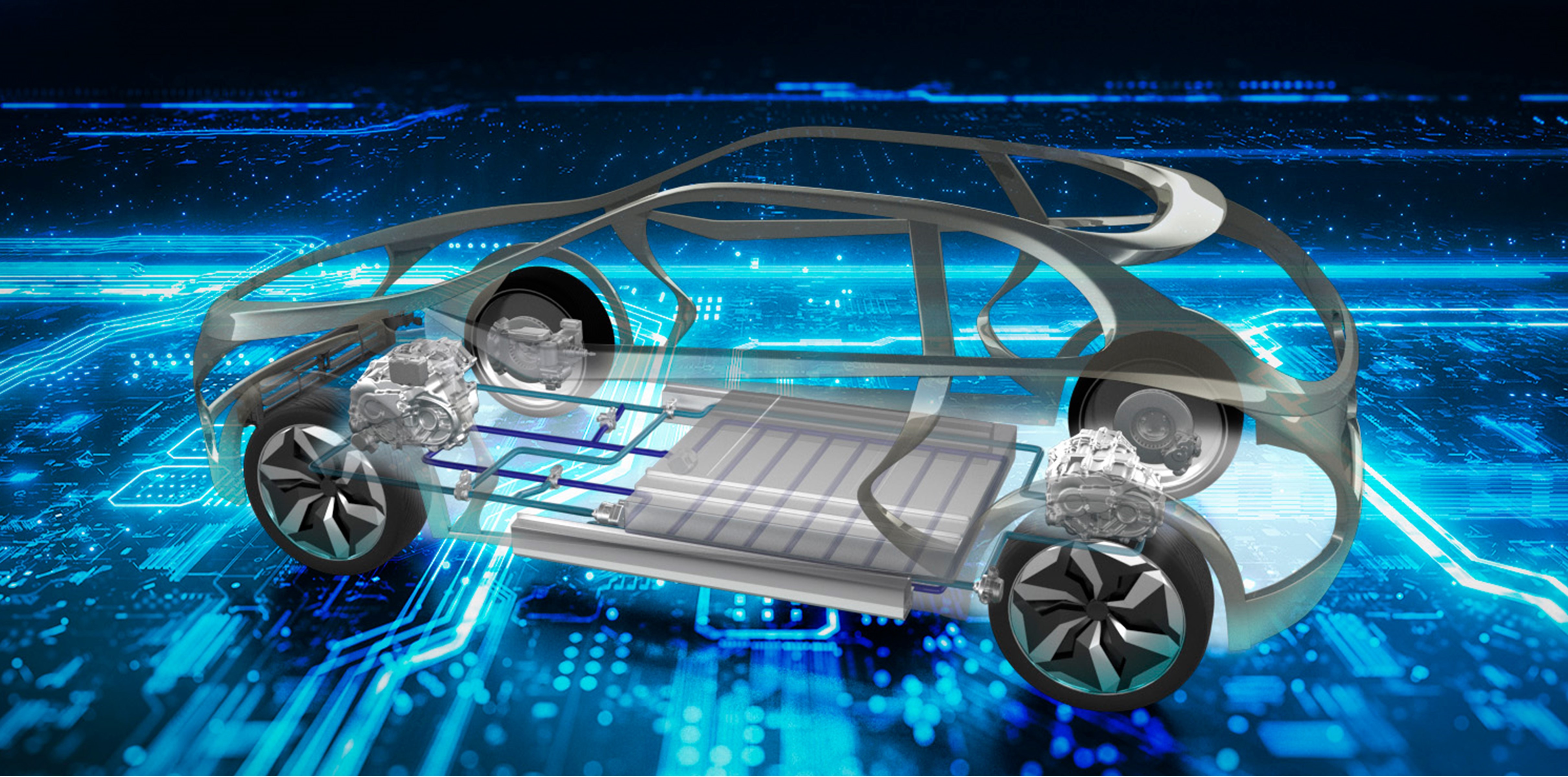

Why is BEV Vance Important for Electric Cars?

In its other meaning, BEV stands for Battery Electric Vehicle. The pure electric form is the main focus of electric car development now and for the near future. A great many new electric car makers have appeared, and internet companies and even high-tech real estate businesses have entered the industry. This suggests that pure electric vehicles are set to take a rapidly growing share of the market from gasoline cars, especially in larger cities. It is a big shift, and BEV perception technology plays a part in making these cars smarter and safer.

The connection between BEV (Bird's Eye View) perception and BEVs (Battery Electric Vehicles) might not seem obvious at first, but it is there. As electric vehicles become more common, especially those aiming for higher levels of autonomy, the need for precise environmental understanding grows. Accurately mapping the surroundings from a top-down view helps these cars operate more efficiently and safely. It is much like having a clear digital map always available to consult, which suits electric cars that are often packed with advanced technology.

The rise of electric cars means more vehicles on the road that are capable of advanced driver assistance or full autonomy. For these vehicles, a robust perception system is a must-have rather than a nice-to-have. The BEV Vance perspective offers a streamlined way to process sensor data. That helps these vehicles make quicker and more accurate decisions and contributes to their overall performance and safety.

The Role of BEV Vance in Mapping Our Roads

BEV Vance plays an important part in creating the highly detailed high-definition maps that self-driving cars rely on. These maps are more than navigation aids. They contain precise information about lane markings, road signs and even the exact height of curbs. By converting all sensor data into a unified BEV format, map makers can build these digital representations of the world with greater accuracy and efficiency. The result is something like a precise blueprint of every road, which is essential for autonomous systems to function properly.
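
A common way to turn vector map elements such as lane lines into something a BEV network can learn from, or be compared against, is to rasterize them onto the grid. The sketch below is a simple sampling-based rasterizer. The function name, grid parameters and coordinates are assumptions for illustration (x forward, y left, metres).

```python
import math
from typing import List, Set, Tuple


def rasterize_polyline(vertices: List[Tuple[float, float]], x_min: float, y_min: float,
                       resolution: float, rows: int, cols: int) -> Set[Tuple[int, int]]:
    """Return the (row, col) BEV cells a polyline passes through, by dense sampling.

    Samples are at most half a cell apart, which keeps the trace connected; a cell
    the line only clips at a corner may be missed, which is fine for a sketch.
    """
    cells: Set[Tuple[int, int]] = set()
    step = resolution / 2.0
    for (x0, y0), (x1, y1) in zip(vertices, vertices[1:]):
        n = max(1, math.ceil(math.hypot(x1 - x0, y1 - y0) / step))
        for i in range(n + 1):
            t = i / n
            x, y = x0 + t * (x1 - x0), y0 + t * (y1 - y0)
            r = math.floor((x - x_min) / resolution)
            c = math.floor((y - y_min) / resolution)
            if 0 <= r < rows and 0 <= c < cols:
                cells.add((r, c))
    return cells


# Hypothetical straight lane marking at y = 0.25 m, running 2 m forward.
marking = [(0.0, 0.25), (2.0, 0.25)]
print(sorted(rasterize_polyline(marking, x_min=0.0, y_min=0.0, resolution=0.5, rows=8, cols=8)))
# [(0, 0), (1, 0), (2, 0), (3, 0), (4, 0)]
```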

The HDMapNet paper, for example, highlighted how BEV could be used to make high-definition maps both affordable and effective. This matters because creating and maintaining these maps can be very expensive. The process becomes much more manageable with a multi-sensor setup that can easily be changed or updated, and with all of its information mapped into a BEV space. This helps bring down costs and speeds up map production, both of which are important for the wider adoption of autonomous driving.

The dynamic pluggable structure for multiple sensors means that different types of sensors can be added to or removed from the system easily. This flexibility allows better data collection across a range of conditions and environments. All the information from these sensors is then projected into the BEV Vance view, creating one comprehensive, unified picture. That picture helps make the maps as complete and accurate as possible, which matters for the reliability of self-driving cars.
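
The idea of a pluggable multi-sensor structure can be sketched as a registry of sensor encoders that all agree to output a grid of the same shape. Everything here is hypothetical and is not taken from HDMapNet or any other codebase: the class name, the encoder signature and the averaging rule. Averaging simply stands in for whatever learned fusion a real system would use.

```python
from typing import Any, Callable, Dict, List

Grid = List[List[float]]
Encoder = Callable[[Any], Grid]  # hypothetical: raw sensor data -> BEV grid of the agreed shape


class PluggableBevFusion:
    """Toy fusion stage: sensors can be registered or removed without touching the others."""

    def __init__(self, rows: int, cols: int) -> None:
        self.rows, self.cols = rows, cols
        self.encoders: Dict[str, Encoder] = {}

    def register(self, name: str, encoder: Encoder) -> None:
        self.encoders[name] = encoder

    def unregister(self, name: str) -> None:
        self.encoders.pop(name, None)

    def fuse(self, inputs: Dict[str, Any]) -> Grid:
        """Average the BEV grids of every registered sensor that supplied data this frame."""
        grids = [enc(inputs[name]) for name, enc in self.encoders.items() if name in inputs]
        if not grids:
            raise ValueError("no registered sensor supplied input")
        for g in grids:
            if len(g) != self.rows or any(len(row) != self.cols for row in g):
                raise ValueError("encoder output does not match the shared BEV grid")
        return [[sum(g[r][c] for g in grids) / len(grids) for c in range(self.cols)]
                for r in range(self.rows)]


fusion = PluggableBevFusion(rows=2, cols=2)
fusion.register("camera", lambda image: [[0.0, 1.0], [0.5, 0.25]])
fusion.register("lidar", lambda cloud: [[1.0, 1.0], [0.5, 0.75]])
print(fusion.fuse({"camera": None, "lidar": None}))  # [[0.5, 1.0], [0.5, 0.5]]
fusion.unregister("lidar")                           # unplug a sensor
print(fusion.fuse({"camera": None}))                 # [[0.0, 1.0], [0.5, 0.25]]
```

The design choice is that the grid shape is the only contract between sensors. Adding a radar encoder, for example, would not require changing the camera or lidar code.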

What Steps are Needed for BEV Vance to Work in Autonomous Driving?

Making autonomous decision-making and planning work on top of BEV requires several important steps. The first is to gather and label a large amount of driving data. This includes data from the car's sensors, such as cameras and radar, as well as detailed map data. The collection effort is extensive because the more varied and complete the data, the better the system can learn to understand the world around it.

Data collection and labeling is perhaps the most time-consuming step. Imagine going through countless hours of driving footage and marking every car, pedestrian, lane line and traffic sign. This meticulous labeling is what teaches the BEV Vance perception system to identify objects and their positions in the top-down view. It is a bit like teaching a child to recognize things by showing many examples, which takes a lot of effort and patience.
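
Labels only become useful for BEV training once they live in the same top-down frame as the predictions. This minimal sketch assumes a hypothetical label format: a box centre, size and heading in the ego frame (x forward, y left, metres, radians). It converts a labelled vehicle into its BEV footprint. The class name and fields are illustrative, not a real dataset schema.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class BevBox:
    """Hypothetical BEV label: centre (x, y), size (length, width) in metres, yaw in radians."""
    category: str
    x: float
    y: float
    length: float
    width: float
    yaw: float

    def corners(self) -> List[Tuple[float, float]]:
        """Footprint corners in the ego frame, counter-clockwise starting front-left."""
        c, s = math.cos(self.yaw), math.sin(self.yaw)
        hl, hw = self.length / 2.0, self.width / 2.0
        local = [(hl, hw), (-hl, hw), (-hl, -hw), (hl, -hw)]
        return [(self.x + c * dx - s * dy, self.y + s * dx + c * dy) for dx, dy in local]


car = BevBox("car", x=10.0, y=2.0, length=4.0, width=2.0, yaw=0.0)
print(car.corners())  # [(12.0, 3.0), (8.0, 3.0), (8.0, 1.0), (12.0, 1.0)]
```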

Once the data is collected and labeled, the next step is to train the neural networks to process it and generate accurate BEV representations. During training, the algorithms learn to convert raw sensor data into a useful overhead map. This is a continuous process of refinement in which the system steadily gets better at understanding its environment. Over time, that helps the autonomous vehicle become more reliable and safer.
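
Training boils down to repeatedly nudging the model so that its predicted BEV map agrees better with the labelled one. The sketch below uses a per-cell binary cross-entropy loss, a common choice for BEV segmentation. As a deliberate simplification, it optimizes free per-cell logits directly instead of the weights of a neural network. The names, the learning rate and the toy data are hypothetical.

```python
import math
from typing import List

Grid = List[List[float]]


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def bev_bce_loss(logits: Grid, target: List[List[int]]) -> float:
    """Mean binary cross-entropy between per-cell logits and 0/1 BEV labels."""
    total, count = 0.0, 0
    for z_row, t_row in zip(logits, target):
        for z, t in zip(z_row, t_row):
            p = min(max(sigmoid(z), 1e-7), 1.0 - 1e-7)  # keep log() finite
            total -= t * math.log(p) + (1 - t) * math.log(1.0 - p)
            count += 1
    return total / count


def train_step(logits: Grid, target: List[List[int]], lr: float) -> Grid:
    """One gradient-descent step; d(BCE)/d(logit) = sigmoid(logit) - label.

    The 1/N factor from the mean is folded into the learning rate.
    """
    return [[z - lr * (sigmoid(z) - t) for z, t in zip(z_row, t_row)]
            for z_row, t_row in zip(logits, target)]


# Hypothetical 2 x 3 labelled BEV patch (1 = lane marking) and an untrained prediction.
label = [[0, 1, 0],
         [0, 1, 0]]
logits = [[0.0] * 3 for _ in range(2)]
print(round(bev_bce_loss(logits, label), 4))  # 0.6931, i.e. ln 2 at p = 0.5
for _ in range(100):
    logits = train_step(logits, label, lr=1.0)
print(bev_bce_loss(logits, label) < 0.1)  # True
```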

Sharing Knowledge on BEV Vance - A Community Effort

Platforms like Zhihu, a popular Chinese question-and-answer community, play a role in how knowledge about advanced topics such as BEV Vance is shared. People ask questions, share their experiences and offer insights there, all with the aim of helping others find answers. Platforms like this spread information and encourage discussion of complex technical subjects, which is valuable for the whole field.

Zhihu, launched in January 2011, has a mission to help people share knowledge and experience more effectively, and it is known for a serious, professional approach to content. In that sense it serves as a public forum where experts and enthusiasts alike can discuss the finer points of BEV perception, its challenges and its future. This open exchange of ideas supports the advancement of technologies like autonomous driving by enabling collective learning and problem-solving.

Because such platforms exist, discussions of BEV Vance technology can happen in the open, from its theoretical challenges to its practical applications. People share code, discuss research papers and troubleshoot problems together. This collaborative environment speeds up innovation by letting many people contribute to solving shared challenges, which helps move the technology forward.

Beyond data and training, making BEV Vance work for autonomous driving also requires continuous testing and validation. The systems must be run through countless real-world scenarios and simulations to make sure they behave safely and predictably. It is a constant loop: test, identify areas for improvement, refine the algorithms and test again. This iterative process is essential for building trust in autonomous vehicles and for their eventual widespread adoption.
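
The test-and-refine loop needs a number that can be compared from one iteration to the next. For BEV segmentation outputs, intersection-over-union (IoU) is a widely used metric. The minimal sketch below computes it for a single class on toy masks; the function name and data are hypothetical.

```python
from typing import List

Mask = List[List[int]]  # 1 = cell belongs to the class (e.g. drivable area), 0 = not


def bev_iou(pred: Mask, target: Mask) -> float:
    """Intersection-over-union of two same-shaped binary BEV masks."""
    if len(pred) != len(target) or any(len(p) != len(t) for p, t in zip(pred, target)):
        raise ValueError("masks must have the same shape")
    inter = union = 0
    for p_row, t_row in zip(pred, target):
        for p, t in zip(p_row, t_row):
            inter += int(bool(p) and bool(t))
            union += int(bool(p) or bool(t))
    return inter / union if union else 1.0  # two empty masks agree perfectly


prediction = [[1, 1, 0],
              [0, 1, 0]]
ground_truth = [[1, 0, 0],
                [0, 1, 1]]
print(bev_iou(prediction, ground_truth))  # 0.5
```

As an illustration, a validation loop might recompute this score on a held-out set after each change and flag any version whose IoU drops. That gating rule is an example, not a prescribed procedure.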

Ongoing development of BEV Vance technology spans many aspects, from obtaining the most accurate overhead view from various sensors to feeding that view into the car's decision-making. The goal is a unified way for the car to "see" and "understand" its surroundings so it can make good choices on the road. This comprehensive approach is what makes BEV such a promising area for self-driving cars, enabling them to perceive the world with greater clarity and precision.
